v0.6.1

February 24, 2026

What's Changed

- Fixes tests by @merschformann in #43

- Fixes announcing run id of app run by @merschformann in #44

- Release v0.6.1 by @merschformann in #45

Full Changelog: v0.6.0...v0.6.1

nextmv-v1.1.2

February 18, 2026

What's Changed

- Fix manifest copying with `local` package by @sebastian-quintero in #235

nextmv-v1.1.1

February 18, 2026

What's Changed

- Upgrades uv lock dependencies of nextmv-gurobipy and nextmv-scikit-learn by @merschformann in #233

- Move `all` optional deps to mandatory deps by @sebastian-quintero in #232

- Release v1.1.1 by @sebastian-quintero in #234

nextmv-v1.1.0

February 17, 2026

What's Changed

- Add instance locking/unlocking to SDK and CLI by @sebastian-quintero in #227

- Adds support for Cloud Marketplace in SDK and CLI by @sebastian-quintero in #226

- Adds support for new output field on metrics, deprecates statistics by @racquesta in #216

- Adds `local app` and `local run` command trees in the CLI by @merschformann in #218

- Fixes file copying in `local` package by @sebastian-quintero in #228

- Supports other languages for `local` execution by @sebastian-quintero in #229

- Release v1.1.0 by @sebastian-quintero in #230

nextmv-v1.0.0

February 2, 2026

What's Changed

This is our first major release of the nextmv Python SDK and IT IS A BREAKING CHANGE.

Here is a compilation of the main things that changed with this release. Please note that this list is not meant to be comprehensive and you should exercise caution when upgrading to the new version.

- Adds the `cli` package containing the Nextmv CLI.

- Adds the `nextmv` command to run the Nextmv CLI.

- Changes to `cloud` package.
  - (BREAKING) Removes the following imports. They should be imported directly from `nextmv`: `MANIFEST_FILE_NAME`, `Manifest`, `ManifestBuild`, `ManifestContent`, `ManifestContentMultiFile`, `ManifestContentMultiFileInput`, `ManifestContentMultiFileOutput`, `ManifestOption`, `ManifestPython`, `ManifestPythonModel`, `ManifestRuntime`, `ManifestType`, `PollingOptions`, `poll`, `ErrorLog`, `ExternalRunResult`, `Format`, `FormatInput`, `FormatOutput`, `Metadata`, `RunConfiguration`, `RunInformation`, `RunLog`, `RunQueuing`, `RunResult`, `RunType`, `RunTypeConfiguration`, `TrackedRun`, `TrackedRunStatus`, `run_duration`, `safe_id`, `safe_name_and_id`, `Status`, `StatusV2`.
  - (BREAKING) Removes the `ToleranceType` enum.
  - (BREAKING) Removes the `Status` enum.
  - Adds the `cloud.list_application` function.
  - Adds the `cloud.ApplicationType` enum.
  - Adds the `cloud.community` package. The following functions can be imported from the `cloud` package directly: `clone_community_app` and `list_community_apps`.

- Changes to `nextmv` package.
  - (BREAKING) Removes support for `CSV` in `InputFormat`.
  - (BREAKING) Removes the `load_local` and `write_local` functions.
  - (BREAKING) Removes the `Parameter` class.
  - (BREAKING) Removes the `Status` enum.
  - (BREAKING) Removes the `parameters_dict` method from the `Options` class.
  - (BREAKING) Removes the `from_parameters_dict` method from the `Options` class.
  - (BREAKING) Removes the `status` field from various classes like `Run`.
  - Adds the `sleep_duration_func` field to the `PollingOptions` class.

- Changes to `cloud.Application` class.
  - (BREAKING) `id` and `name` arguments are now optional in the `new_instance` method. Ordering of arguments has changed.
  - (BREAKING) `id` and `name` arguments are now optional in the `new_secrets_collection` method.
  - (BREAKING) `id` and `name` arguments are now optional in the `new_managed_input` method.
  - (BREAKING) Deprecates `new_ensemble_defintion` method in favor of `new_ensemble_definition` (note the spelling correction).
  - (BREAKING) `name` argument is now optional in the `new_acceptance_test` method.
  - (BREAKING) `name` argument is now optional in the `new_acceptance_test_with_result` method.
  - (BREAKING) `name` argument is now optional in the `new_batch_experiment` method.
  - (BREAKING) `name` argument is now optional in the `new_batch_experiment_with_result` method.
  - (BREAKING) `id` and `name` arguments are now optional in the `new_scenario_test` method. Ordering of arguments has changed.
  - (BREAKING) `id` and `name` arguments are now optional in the `new_scenario_test_with_result` method. Ordering of arguments has changed.
  - (BREAKING) `name` argument is now optional in the `new` classmethod.
  - (BREAKING) Removes `instance_ids` argument from the following methods: `new_batch_experiment`, `new_batch_experiment_with_result`, `new_scenario_test`, and `new_scenario_test_with_result`.
  - (BREAKING) `id` argument is now optional in the `new_acceptance_test` method. Ordering of arguments has changed.
  - (BREAKING) `id` argument is now optional in the `new_acceptance_test_with_result` method. Ordering of arguments has changed.
  - (BREAKING) `id` and `name` arguments are now optional in the `new_input_set` method.
  - Refactors the `cloud.application.py` file and turns it into the `cloud/application` package. Import paths remain the same.
  - Adds `get` classmethod.
  - Adds fields like: `type`, `default_instance_id`, `default_experiment_id`, and more.
  - Adds arguments to the `new` classmethod.
  - Adds `update` method.
  - Adds `run_logs_with_polling` method.
  - Adds `output_dir_path` argument to the `run_input` method.
  - Adds `delete_version` method.
  - Adds `update_version` method.
  - Adds `delete_instance` method.
  - Adds `upload_data` method. The `upload_large_input` method is deprecated.
  - Adds `delete_managed_input` method.
  - Adds `status` argument to the `list_runs` method.
  - Adds `new_ensemble_definition` method.
  - Adds `update_input_set` method.
  - Adds `update_acceptance_test` method.
  - Adds the `shadow` methods like `new_shadow_test` and `shadow_test`.
  - Adds the `switchback` methods like `new_switchback_test` and `switchback_test`.
  - Adds the `delete_input_set` method.

- Changes to `cloud.Account` class.
  - Adds `get` classmethod.
  - Adds `new` classmethod.
  - Adds `delete` method.
  - Adds `update` method.

v0.6.0

January 29, 2026

What's Changed

- Upgrade to nextmv 0.40.0, upgrade deprecated references, release v0.6.0 by @sebastian-quintero in #42

Full Changelog: v0.5.0...v0.6.0

nextmv-gurobipy-v0.4.5

January 21, 2026

What's Changed

- `nextmv cloud run create` command by @sebastian-quintero in #186

- Completes the `nextmv cloud run` command tree by @sebastian-quintero in #187

- Adds the `nextmv cloud app` command tree by @sebastian-quintero in #188

- Creates the contributing guide. by @sebastian-quintero in #189

- Adds the `nextmv cloud version` command tree by @sebastian-quintero in #190

- Adds versioning to push by @sebastian-quintero in #191

- Adds `nextmv cloud upload` command tree by @sebastian-quintero in #192

- Adds the `nextmv cloud instance` command tree by @sebastian-quintero in #193

- Adds the `nextmv cloud secrets` command tree by @sebastian-quintero in #194

- Refactors `cloud/application.py` file and turns it into a directory by @sebastian-quintero in #195

- Adds the `nextmv cloud data` command tree, deprecates `upload_large_input` by @sebastian-quintero in #196

- Adds the `nextmv cloud managed-input` command tree by @sebastian-quintero in #197

- Support `gurobipy` 13.0.1 by @sebastian-quintero in #201

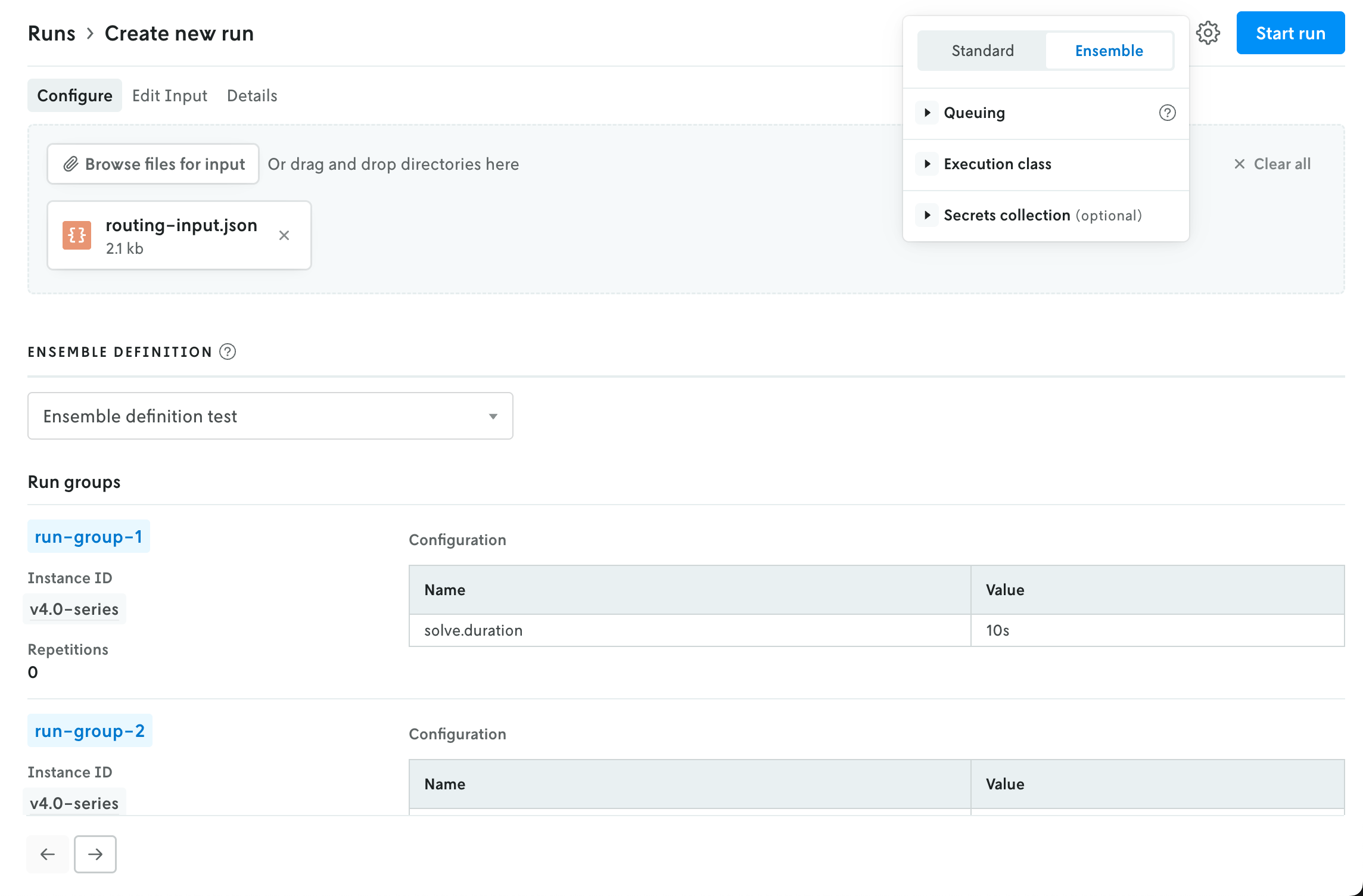

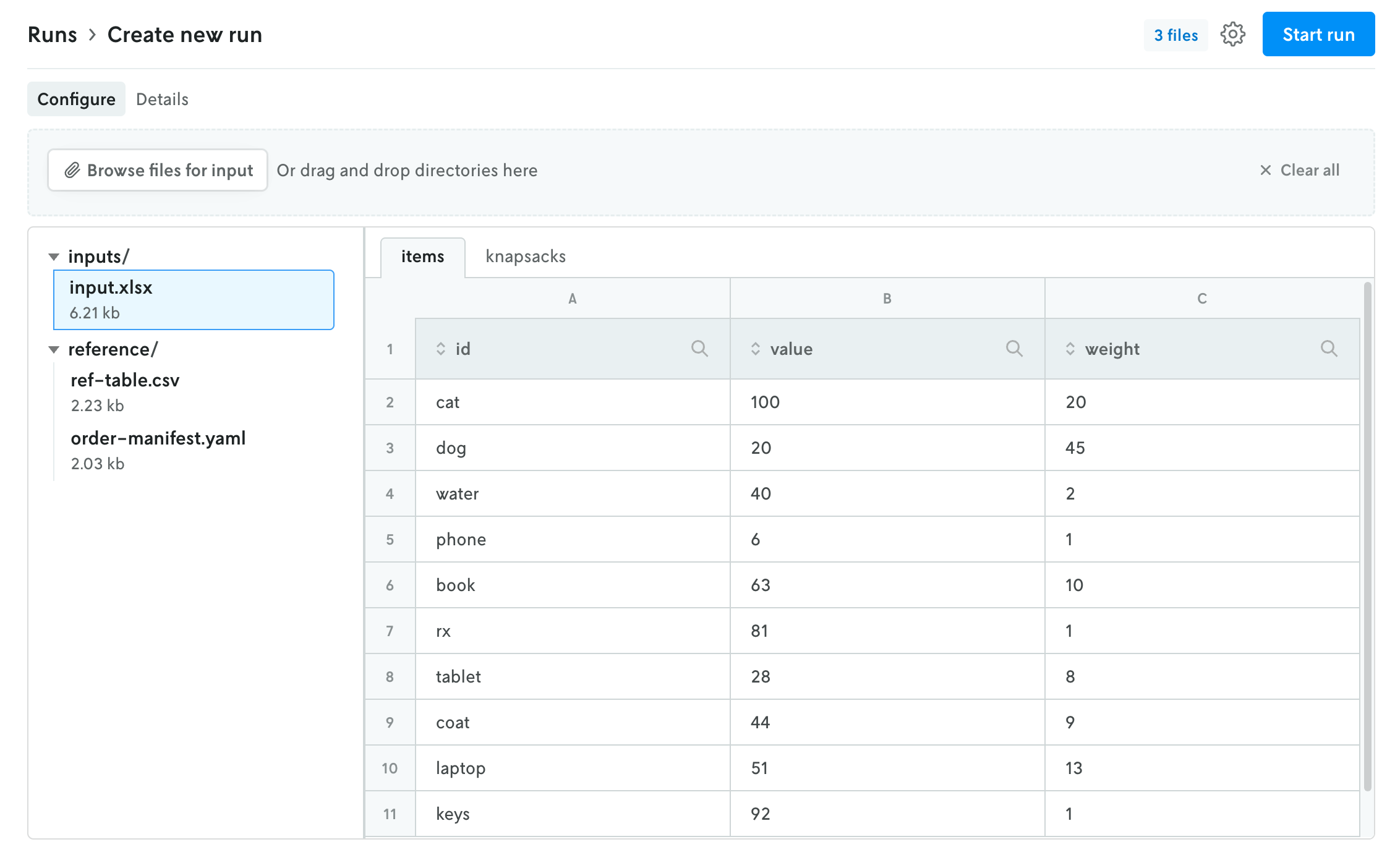

New create run view

January 13, 2026

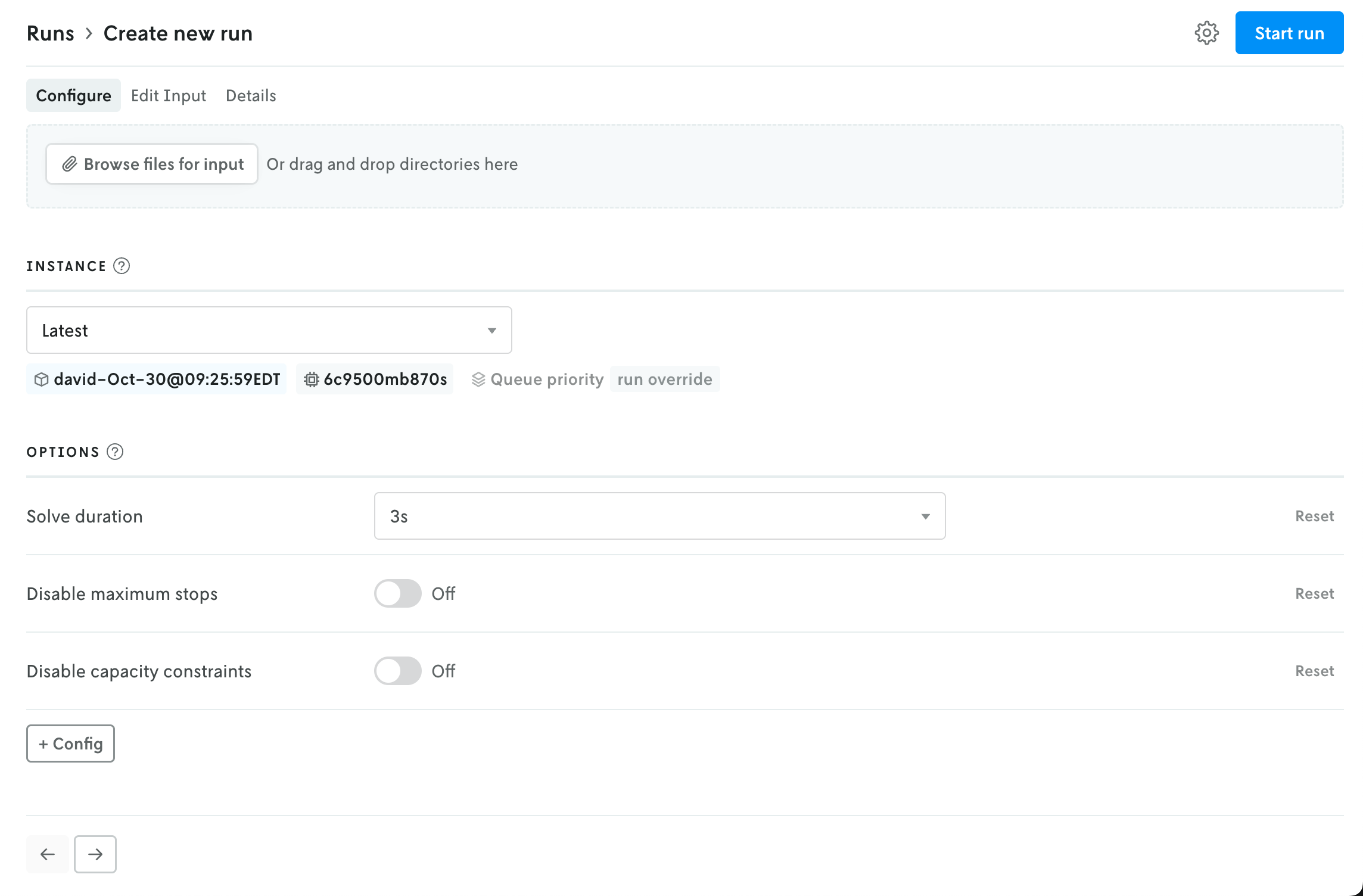

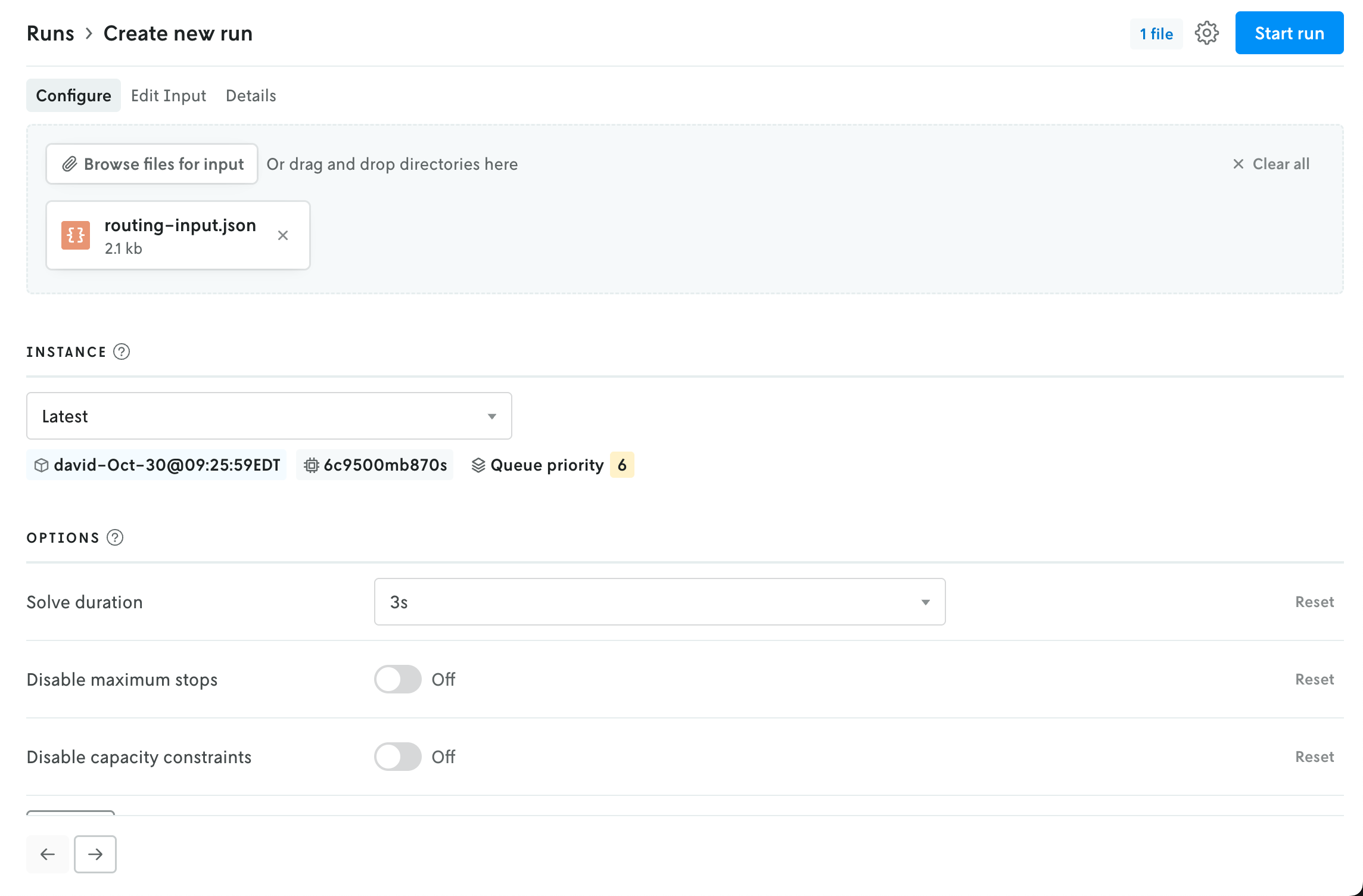

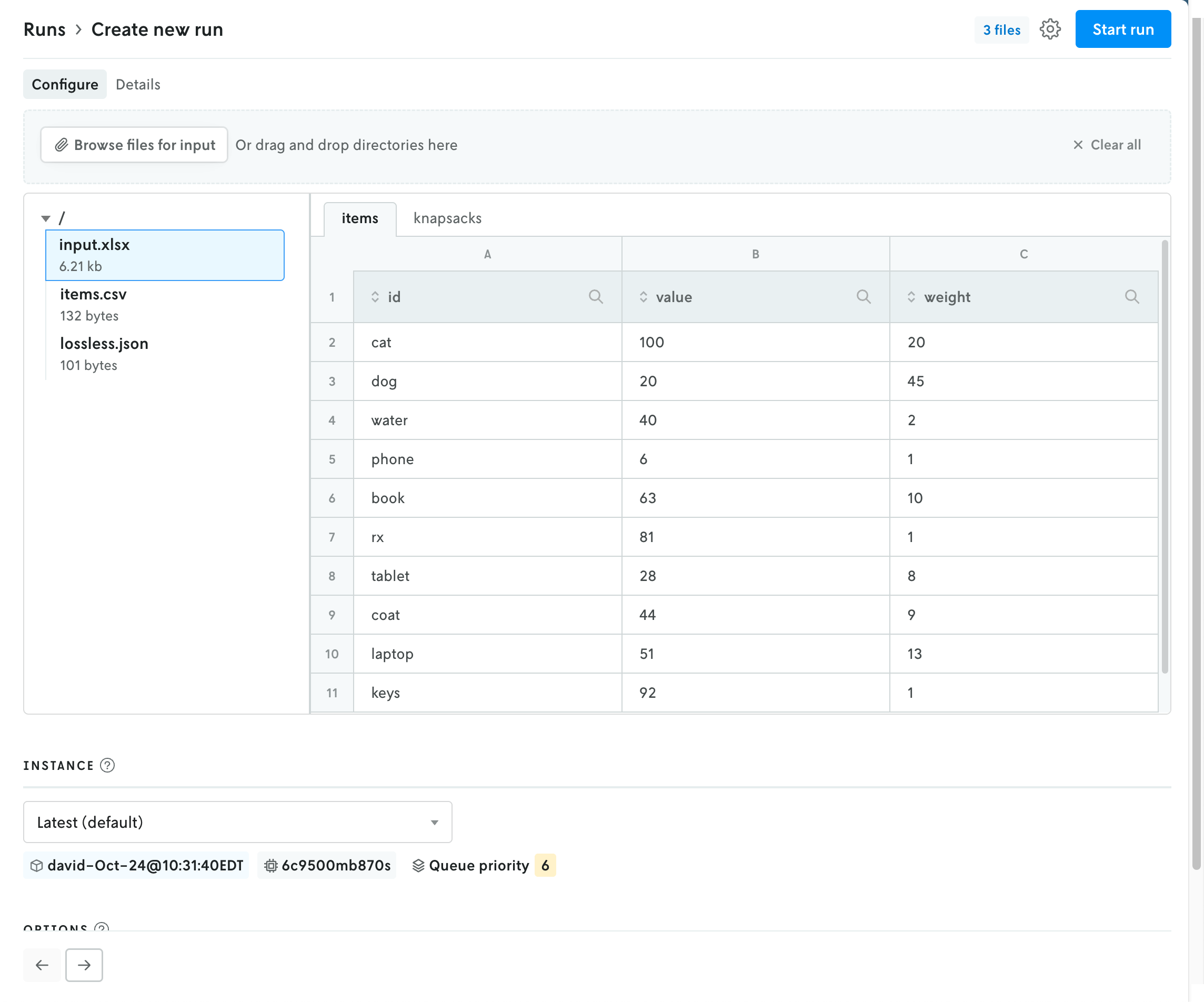

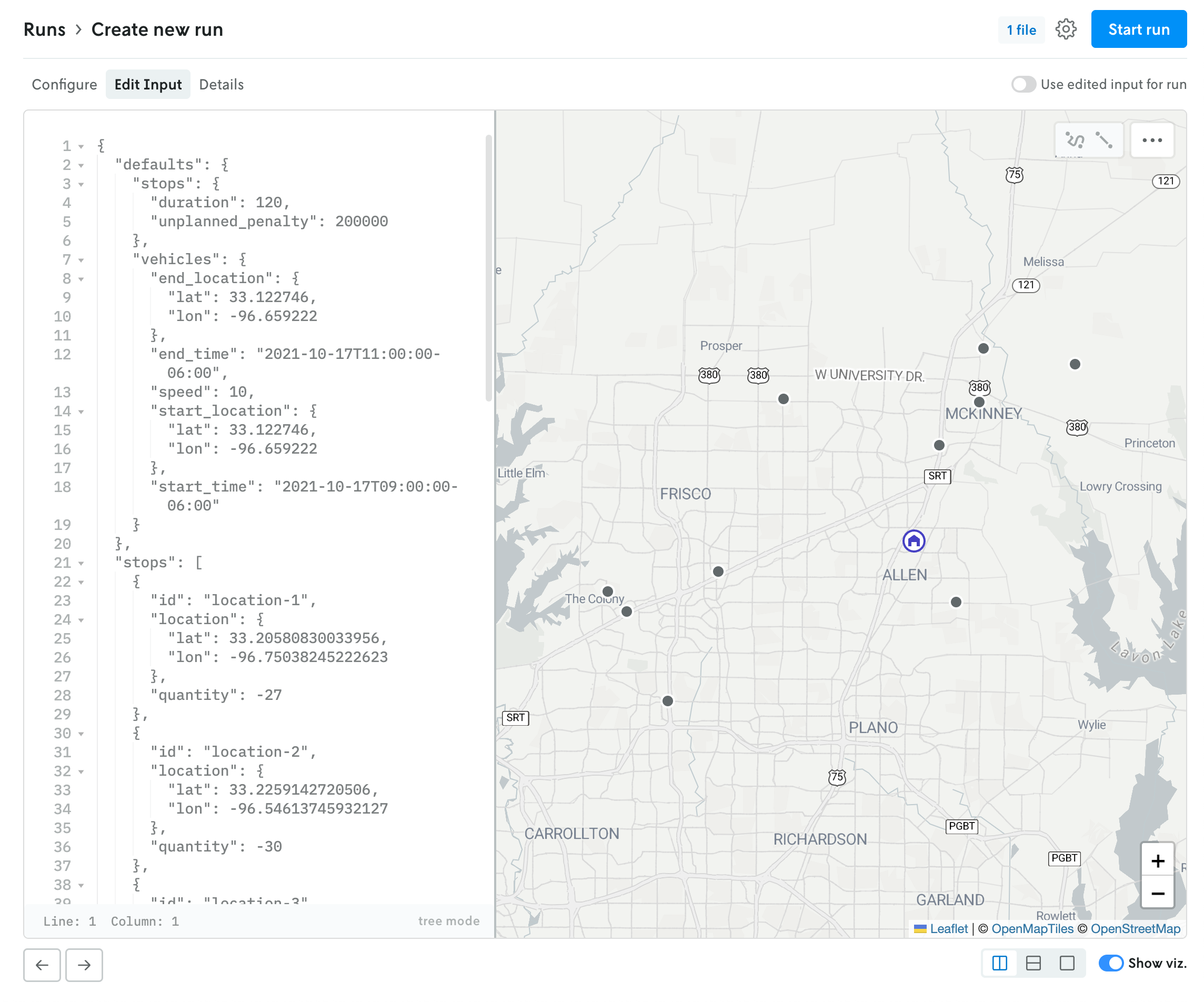

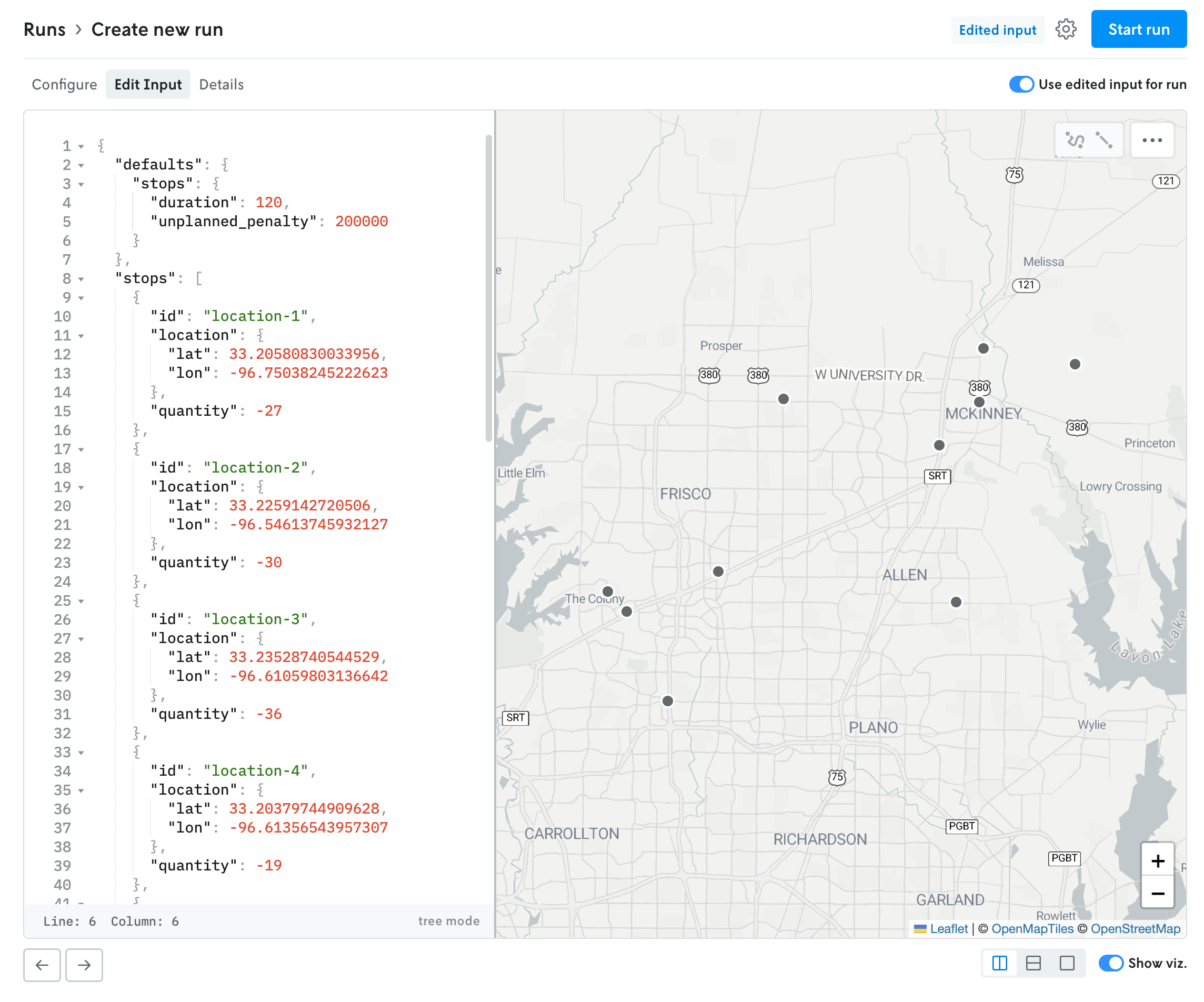

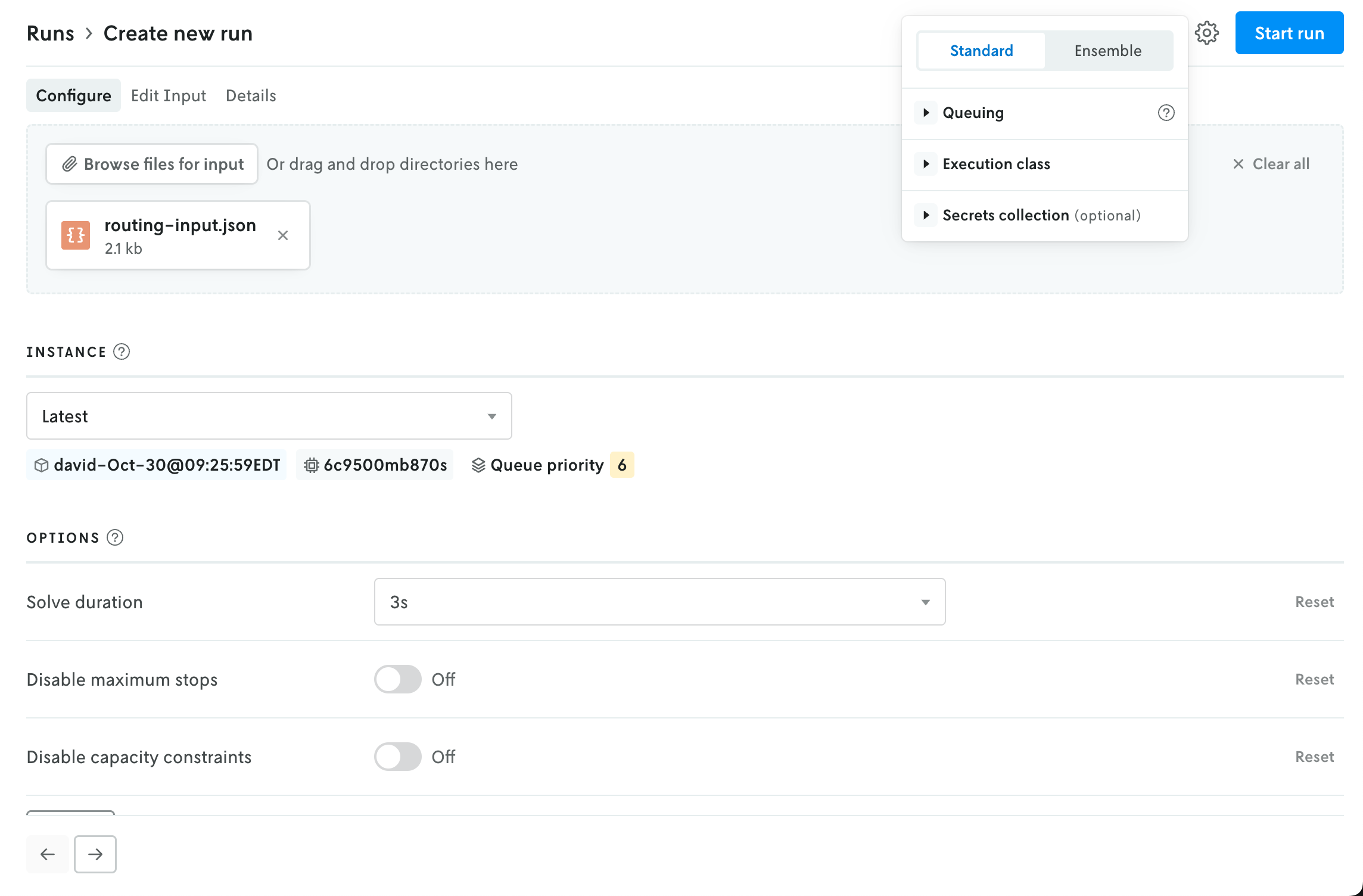

The create run view has been reorganized into a tabbed interface to better reflect the flow for creating a run and to make space for new features in the future.

The initial view provides an uploader for the run input and an interface for selecting an instance (note that if your role is Operator then the UI for selecting an Instance is moved to the advanced settings menu). Depending on the format type for the app, the uploaded file(s) will be represented with either a file icon or shown in the multi-file viewer.

How files appear for apps that use JSON format.

How files appear for apps that use multi-file format.

Updating the instance will refresh the available options for the run in the space below. Note that the Latest instance will be selected by default if no default instance is set for the app.

If the format type is JSON, the contents of the uploaded file — if they are below the render threshold of 10 MB — will be loaded into the JSON editor in the edit input view. You can click on the Edit Input tab to view the contents of the uploaded file. If you would like to edit the contents, and use this edited input for the run rather than the uploaded file, you can toggle the “Use edited input for run” option. (You can toggle this back off as well, and the run will use the original uploaded file for input.)

JSON run with use input editor for run toggled off.

JSON run with use input editor for run toggled on.
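The input-selection behavior described above can be modeled with a small sketch. This is an illustration of the documented rules only, not Console's implementation: the `JsonRunInput` class, its field names, and the reading of 10 MB as 10 × 1024 × 1024 bytes are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: "10 MB" is interpreted as 10 MiB for this illustration.
RENDER_THRESHOLD_BYTES = 10 * 1024 * 1024


@dataclass
class JsonRunInput:
    """Hypothetical model of the state behind the create run view."""

    uploaded: str                  # contents of the uploaded JSON file
    edited: Optional[str] = None   # contents of the JSON editor, if changed
    use_edited: bool = False       # the "Use edited input for run" toggle

    def loads_into_editor(self) -> bool:
        # Only files below the render threshold are loaded into the editor.
        return len(self.uploaded.encode("utf-8")) < RENDER_THRESHOLD_BYTES

    def input_for_run(self) -> str:
        # The edited input is used only while the toggle is on;
        # otherwise the run uses the original uploaded file.
        if self.use_edited and self.edited is not None:
            return self.edited
        return self.uploaded


run_input = JsonRunInput(uploaded='{"a": 1}', edited='{"a": 2}')
default_choice = run_input.input_for_run()   # toggle off: uploaded file
run_input.use_edited = True
edited_choice = run_input.input_for_run()    # toggle on: edited input
run_input.use_edited = False
reverted_choice = run_input.input_for_run()  # toggle back off: uploaded file
```

Toggling the option never discards the uploaded file, which is why switching it back off restores the original input.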

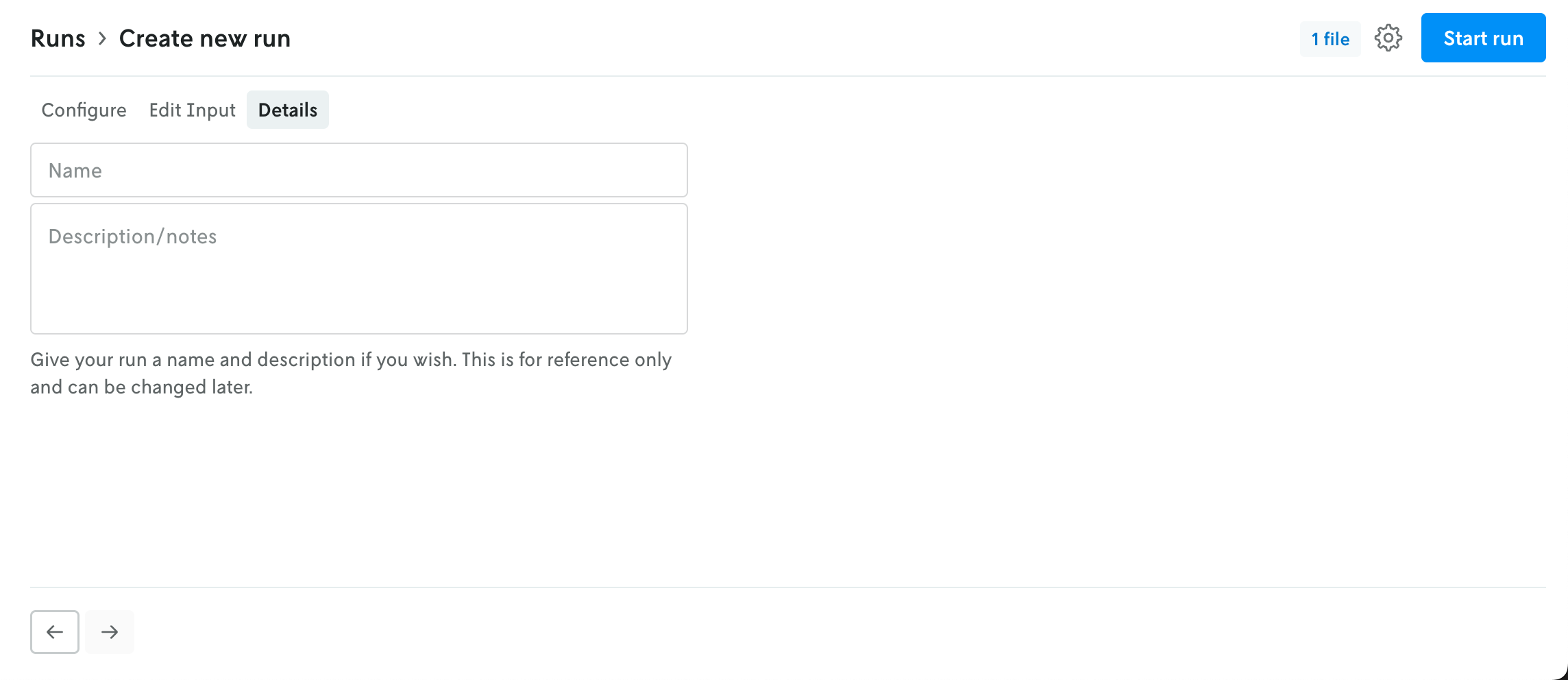

The Details tab allows you to name your run and add a description if you would like. Both of these fields are optional.

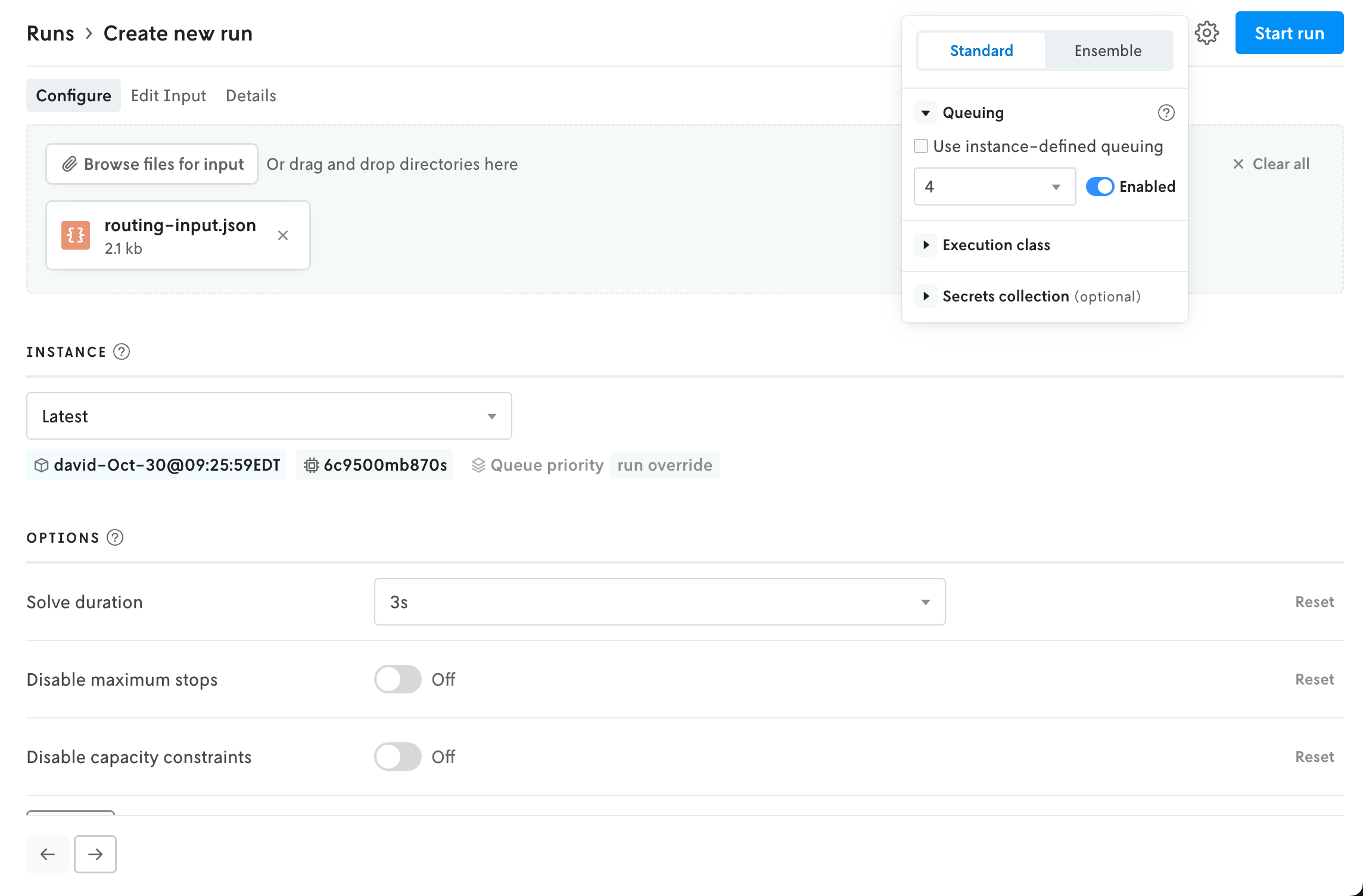

In the upper right, an advanced menu collects the rest of the run settings into a single dropdown. This menu contains the UI for adjusting the run queue settings, selecting a specific execution class for the run, and assigning a secrets collection to the run. Note that any run-level setting will override an instance-level setting (this is reflected in the UI as well).

The advanced menu is also where you select the run type. Standard is the default setting; if you select Ensemble then the UI in the main view will be adjusted to show the options for creating an ensemble run (the instance selector is replaced with a dropdown select menu for ensemble definitions).
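The precedence rule mentioned above (a run-level setting overrides the instance-level one) amounts to a simple merge. The sketch below is a minimal illustration under assumptions: the function name and the setting keys (`execution_class`, `secrets_collection_id`, `queuing_priority`) are hypothetical and are not taken from the Nextmv API.

```python
from typing import Any, Dict


def effective_settings(
    instance_settings: Dict[str, Any],
    run_settings: Dict[str, Any],
) -> Dict[str, Any]:
    """Merge settings so that run-level values win over instance-level ones.

    A run-level value of None is treated as "not set" and falls back to the
    instance-level value (an assumption made for this sketch).
    """
    merged = dict(instance_settings)
    for key, value in run_settings.items():
        if value is not None:
            merged[key] = value
    return merged


# Hypothetical setting names, for illustration only.
instance_level = {"execution_class": "standard", "secrets_collection_id": "prod"}
run_level = {"execution_class": "large", "queuing_priority": 3}
resolved = effective_settings(instance_level, run_level)
```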

nextmv-v0.40.0

January 6, 2026

What's Changed

- Disables CLI tool & release v0.40.0 by @sebastian-quintero in #185

nextmv-v0.39.0

December 24, 2025

What's Changed

- Adds CLI package by @sebastian-quintero in #181

- Introduces the `configure` command by @sebastian-quintero in #182

- Adds the `community` command tree by @sebastian-quintero in #183

- Release v0.39.0 by @sebastian-quintero in #184

v0.5.0

December 19, 2025

What's Changed

- Adds the full end-to-end tutorial, and refactors docs by @sebastian-quintero in #41

Full Changelog: v0.4.1...v0.5.0

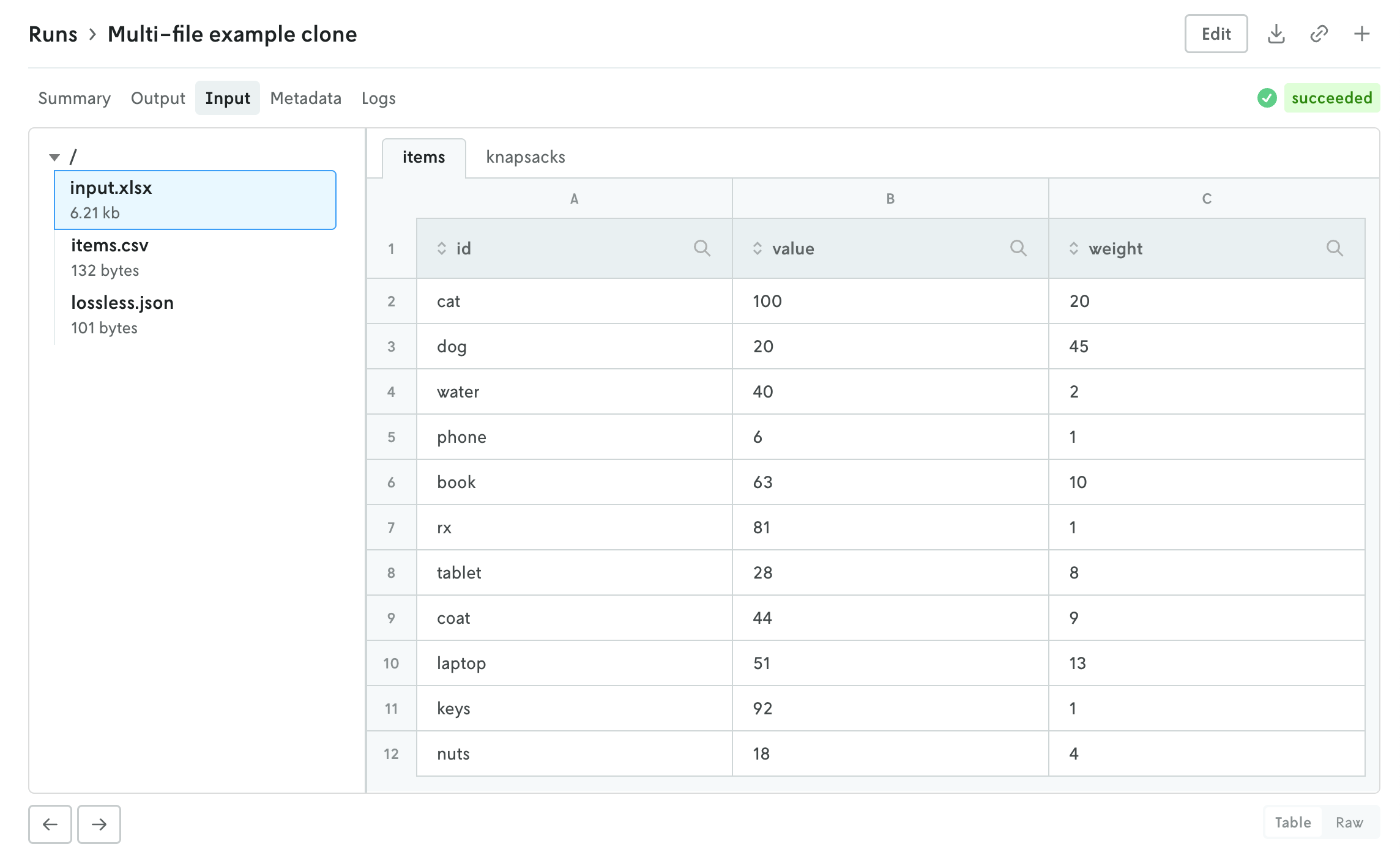

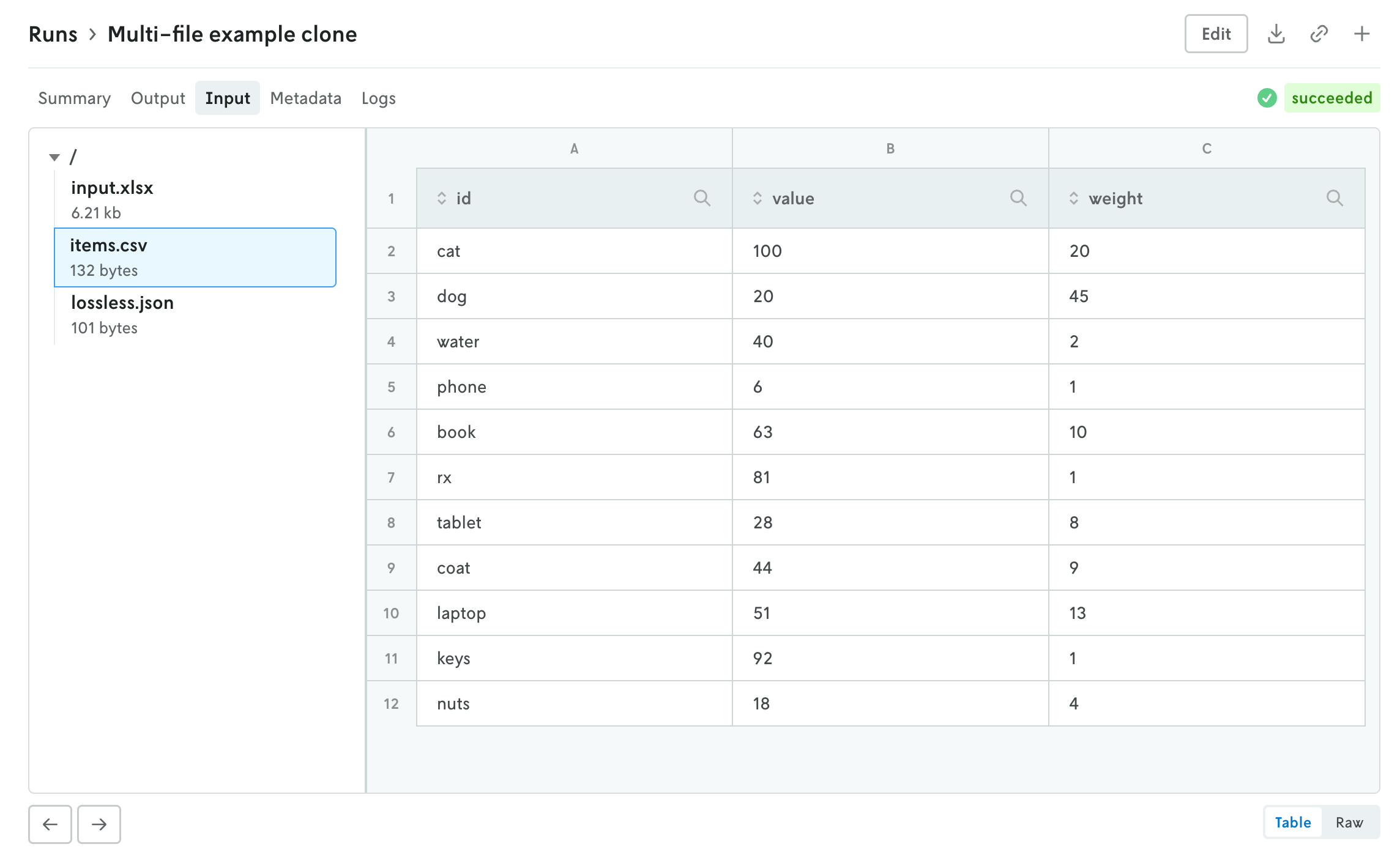

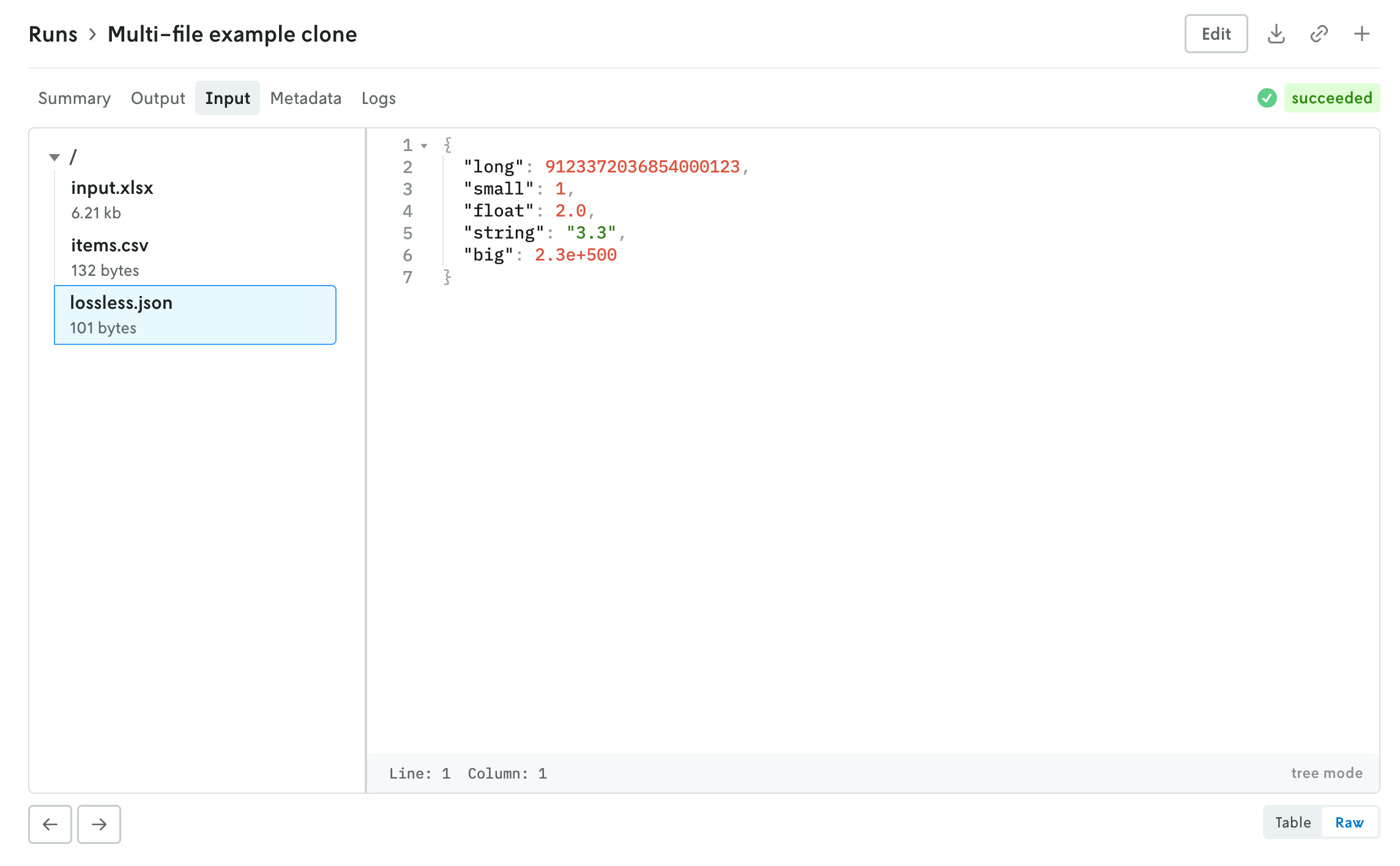

Multi-file viewer

December 4, 2025

Console has a new multi-file viewer that allows you to browse the contents of multi-file input and output. You can view CSV and JSON files in table views or raw views, and you can view XLSX files as well (powered by SheetJS Community Edition). You can view the contents of other types of files as well (.txt, .yaml, etc.).

The left side is the file browser and the right side displays the contents of the file. You can also browse the contents of a multi-file run input on the create run view before you make the run. Note that for multi-file runs you can drag and drop files and directories and the directory structure will be preserved.

The file size threshold for viewing individual files within a multi-file run in Console is 10 MB. Any file over 10 MB will display an interface for downloading the individual file. You can also download the entire input or output file using the standard action buttons in the header of the run details view.
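As a rough illustration of the viewing rules above, the sketch below maps a file to the views the text describes. It is not Console's code: the function name, the view labels (`table`, `raw`, `spreadsheet`, `download`), and the reading of 10 MB as 10 × 1024 × 1024 bytes are assumptions.

```python
from pathlib import PurePosixPath
from typing import List

# Assumption: "10 MB" is interpreted as 10 MiB for this illustration.
VIEW_THRESHOLD_BYTES = 10 * 1024 * 1024


def available_views(filename: str, size_bytes: int) -> List[str]:
    """Return the (hypothetically labeled) views offered for one file."""
    # Files over the threshold only offer a download interface.
    if size_bytes > VIEW_THRESHOLD_BYTES:
        return ["download"]
    suffix = PurePosixPath(filename).suffix.lower()
    if suffix in (".csv", ".json"):
        return ["table", "raw"]   # CSV and JSON: table view or raw view
    if suffix == ".xlsx":
        return ["spreadsheet"]    # XLSX files are rendered as well
    return ["raw"]                # other files (.txt, .yaml, ...) show contents
```

For example, `available_views("inputs/stops.csv", 2048)` yields the table and raw views, while any file larger than the threshold yields only the download option regardless of its type.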

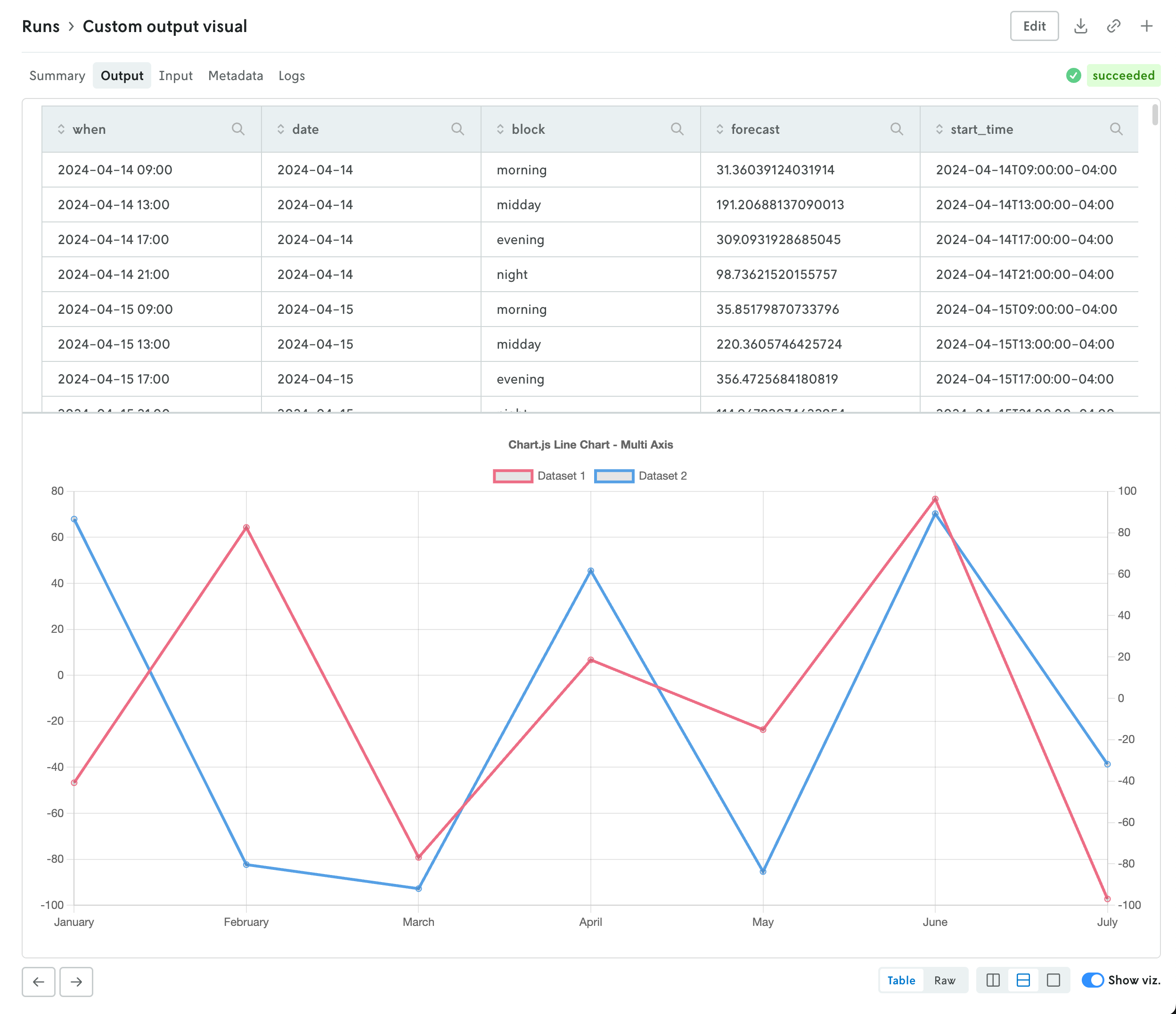

Custom run output visualizations

November 25, 2025

Using custom run visuals, you can now set a custom visual for the run output tab. Previously, the output tab only had a visual if the output matched the set schemas for routing or scheduling apps; now you can set a custom output visual for any type of app.

To assign a custom run visual to the output tab, set the type value to be output-visual:

Your custom visual will have the same controls as the standard routing and scheduling visualizations so you can enable split screen views (or single views) on the data and corresponding visualization. Note that custom visuals will override any standard output visualizations.

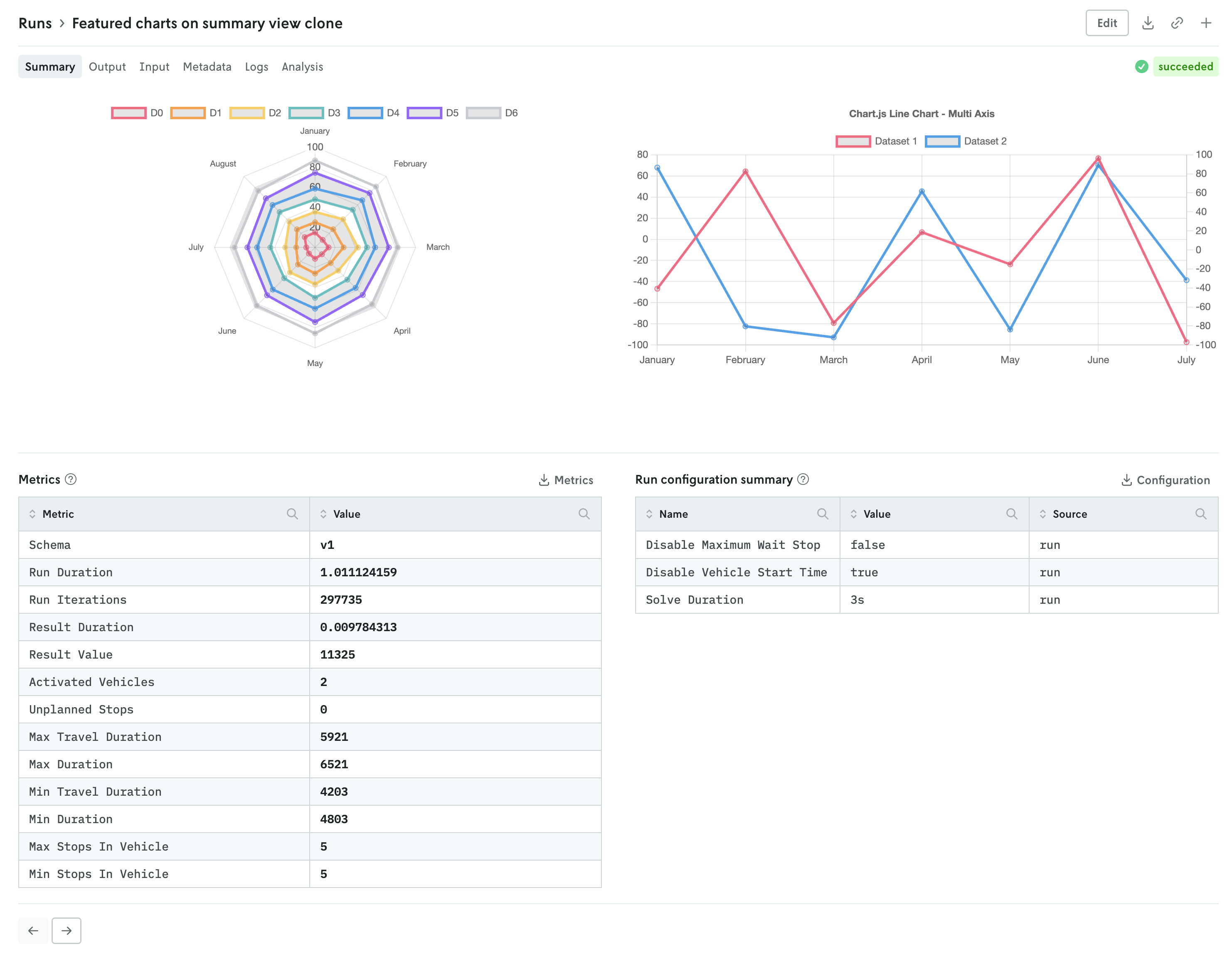

Add custom visuals to the run summary view

November 19, 2025

You can now set specific custom run visuals to appear on the summary view of the run details view. They will appear in a top row above the metrics and run options summary tables.

The custom visuals that appear on the summary view follow the same schema with the addition of a slot property. First, you must specify that the custom run visual should appear in the summary view with the "type": "summary-tab" designation, then you define the order with the slot property. An example of specifying a single custom visual is shown below:

You can specify up to three custom visuals for the run summary view. The slot property can have the values 1, 2, or 3:

- `1` specifies that the visual will be on the left,

- `2` specifies that the visual will be on the right if two, in the middle if three, and

- `3` specifies that the visual will be on the right.

Note that the visuals will automatically adjust for more narrow screens (e.g. mobile devices).
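The slot rules above can be expressed as a short sketch. It assumes each custom visual is a dictionary carrying only the two properties named in this note (`type` and `slot`); the real schema has additional fields that are not shown here, and the `summary_layout` function and position labels are hypothetical.

```python
from typing import Dict, List, Tuple

VALID_SLOTS = (1, 2, 3)


def summary_layout(visuals: List[Dict]) -> List[Tuple[int, str]]:
    """Return (slot, position) pairs for visuals targeting the summary view."""
    # Only visuals marked with "type": "summary-tab" appear on the summary view.
    summary = [v for v in visuals if v.get("type") == "summary-tab"]
    if len(summary) > 3:
        raise ValueError("at most three visuals can appear on the summary view")

    count = len(summary)
    layout = []
    for visual in summary:
        slot = visual.get("slot")
        if slot not in VALID_SLOTS:
            raise ValueError(f"slot must be 1, 2, or 3, got {slot!r}")
        if slot == 1:
            position = "left"
        elif slot == 2:
            # Right if there are two visuals, middle if there are three.
            position = "middle" if count == 3 else "right"
        else:
            position = "right"
        layout.append((slot, position))
    return sorted(layout)


two_visuals = [
    {"type": "summary-tab", "slot": 1},
    {"type": "summary-tab", "slot": 2},
    {"type": "output-visual"},  # ignored: not a summary-tab visual
]
three_visuals = [{"type": "summary-tab", "slot": s} for s in (3, 1, 2)]
```

The note does not say how combinations such as slots 2 and 3 with only two visuals are resolved, so the sketch applies the stated rule literally and leaves that case unspecified.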

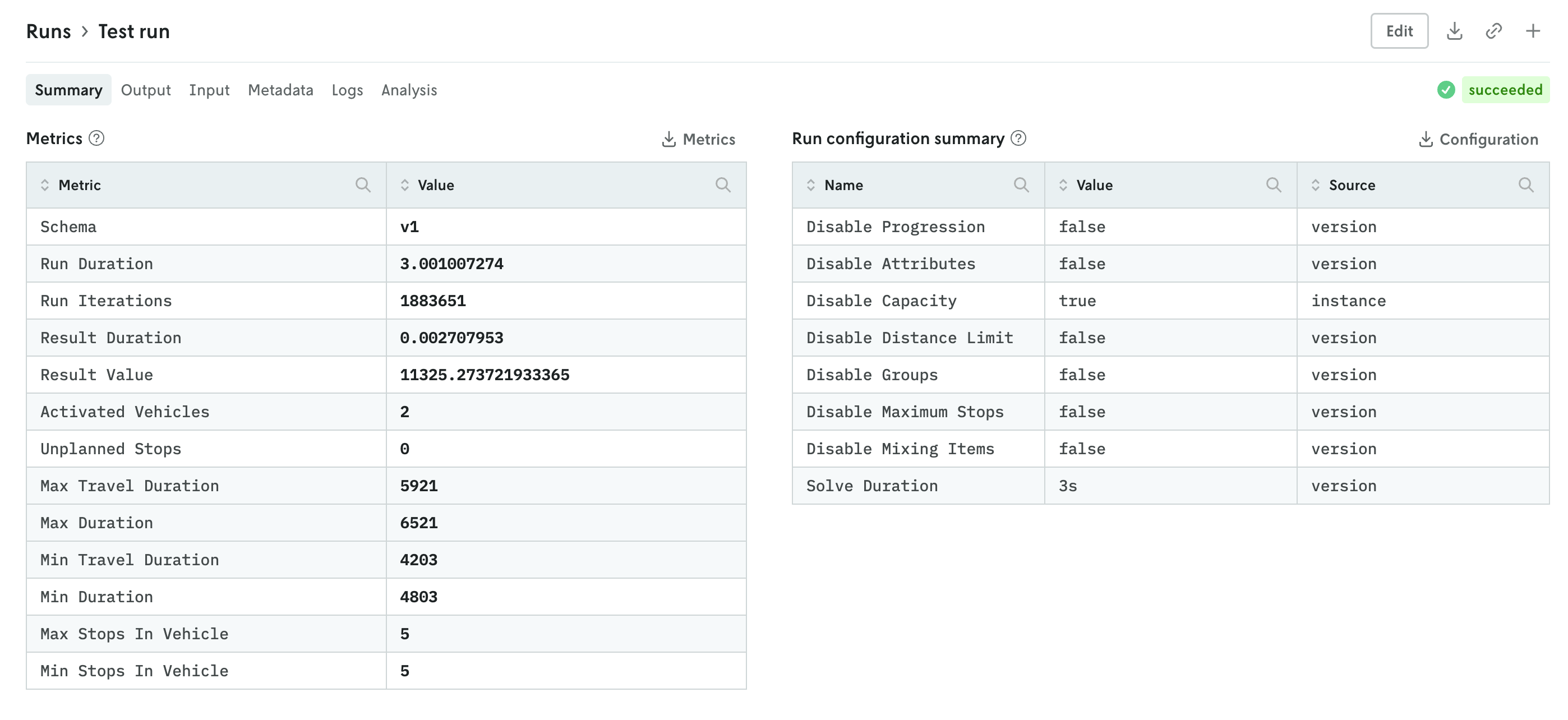

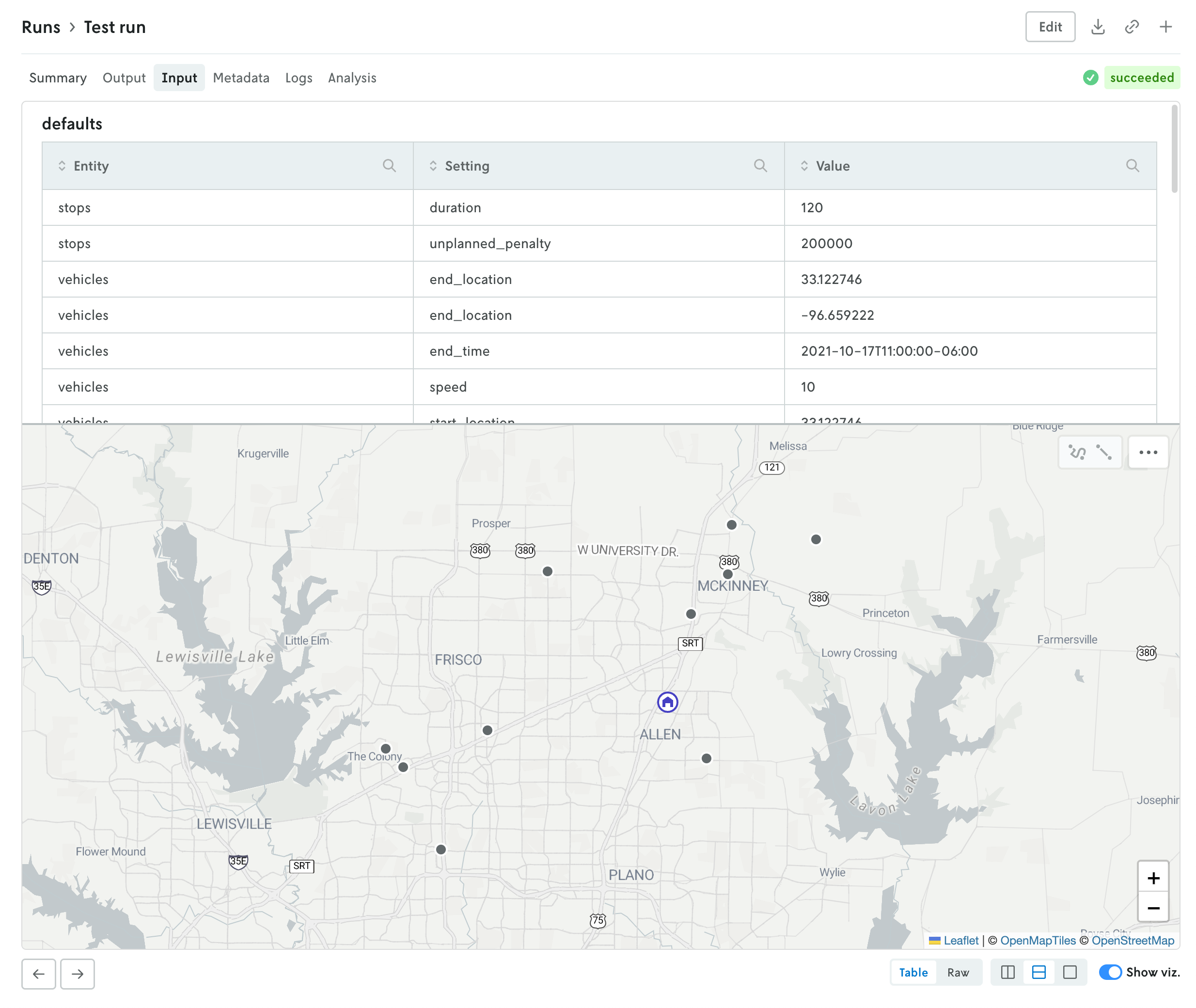

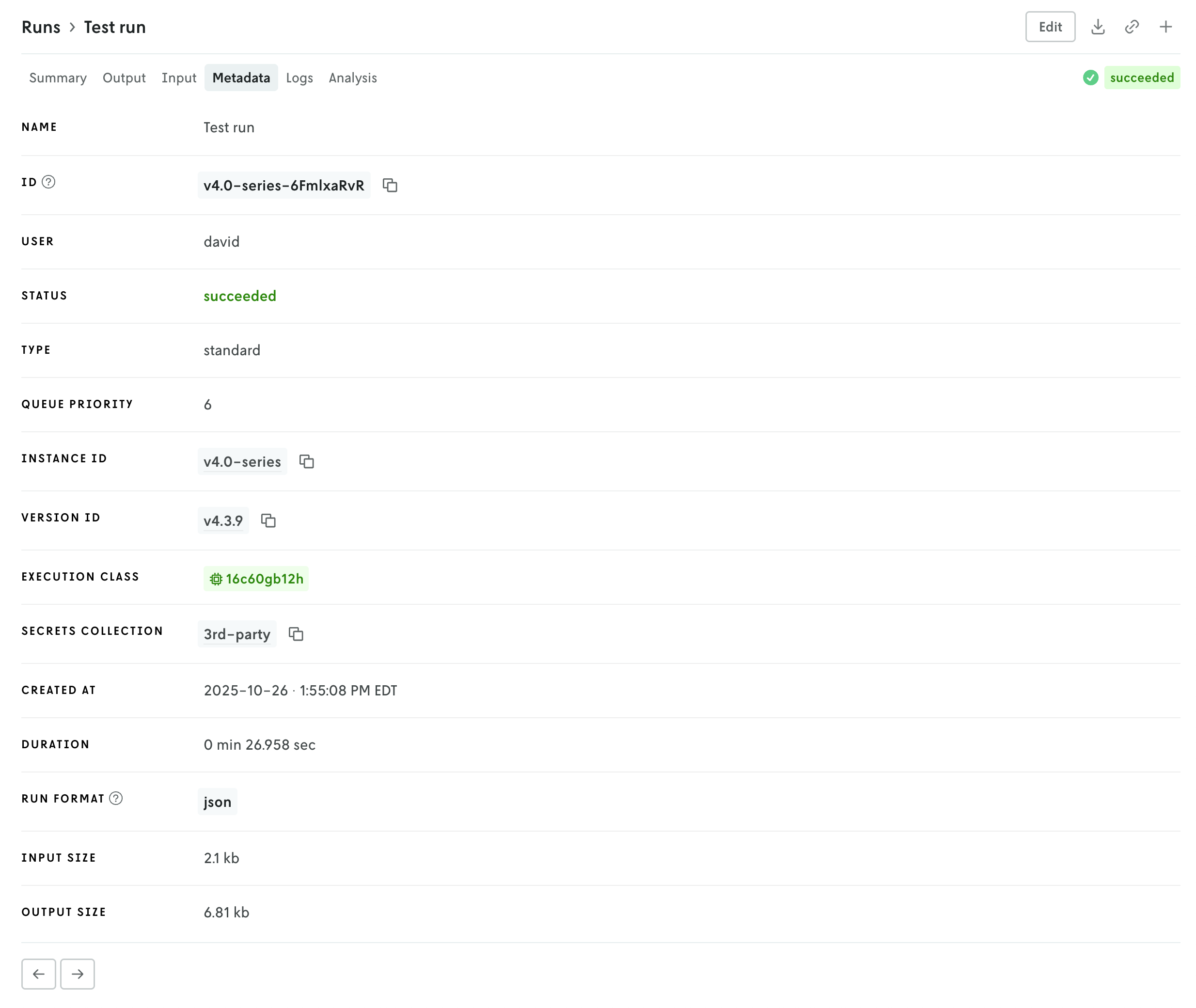

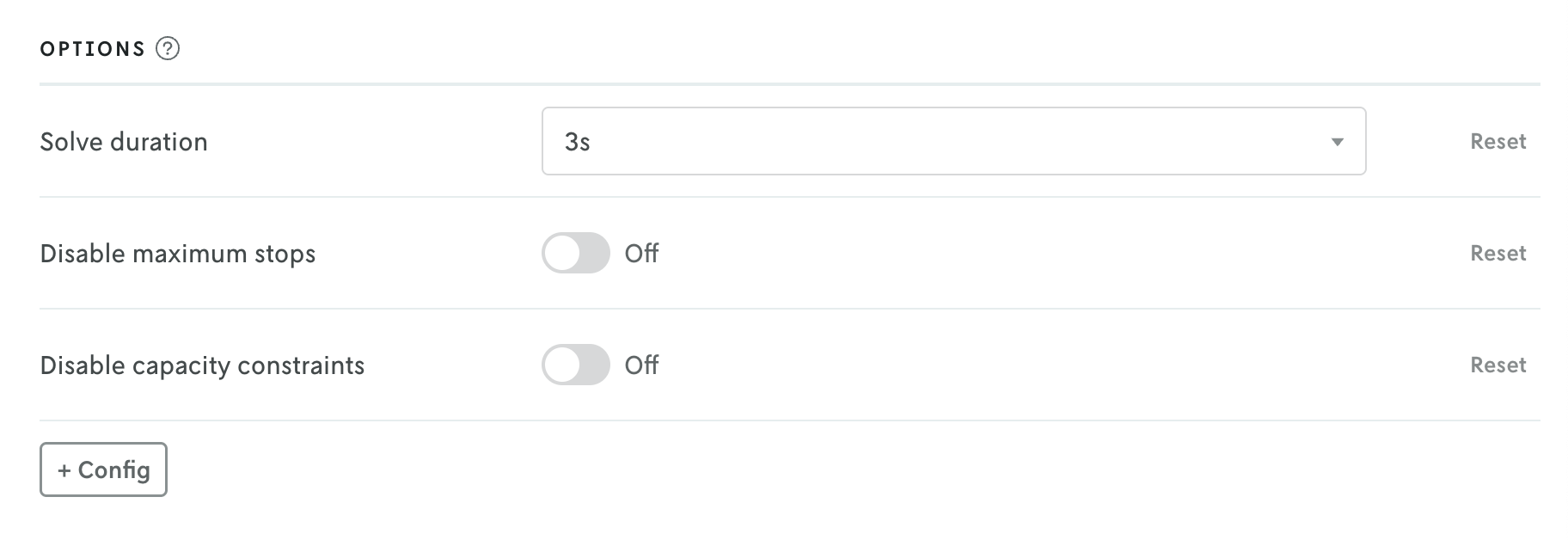

Updated run details views

November 17, 2025

The details view for runs was updated to better align with the data returned and viewing patterns. Summary tables of run metrics and options used are separated into their own view called Summary which is the default landing view when you click on a run. If you are documenting the objective function in your output, then a table summarizing the objective function for the run will be displayed as well.

You can further enhance the summary view by adding your own visuals. See the release note for adding custom visuals to the summary tab for more information.

The input and output tabs have been updated with more intuitive controls for managing table views, raw data views, and visualizations (if they exist).

Additional views include a new Metadata view which displays a variety of information related to the run like duration, type, queuing, which version and instance were used, and so forth. The run status has been pulled out of the metadata view and displayed in the header so you can easily view the status of a run no matter which tab view is active.

There are also tabs for viewing run logs, ensemble analysis details (if it’s an ensemble run), and series data charts if they exist (in the Analysis tab). You can navigate the different views by clicking the tabs in the header or you can use the left and right arrows in the page footer to navigate between the views.

And you can continue to edit the run, download the full input and output files, share views, or create a new run or clone the run you’re viewing using the action buttons in the header.

Set display names for managed options

November 12, 2025

When defining managed options for your model with the app manifest, you now have the ability to set a display name for the option. Previously, the option’s actual name was the value displayed, but internal model option names do not always translate to user-friendly names in a UI.

To define a display name for an option, you just add a display_name property in the ui definition block, like so:

Then, when the option is loaded in Console, the name for the option field will be the display_name value rather than the name value.

You can read more about the display_name option in the UI section of the managed options docs.
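The fallback behavior described above can be sketched as follows. The option is modeled as a plain dictionary with a `name` and an optional `ui` block containing `display_name`, as named in this note; the `option_label` function and the sample option values are hypothetical, and any other manifest fields are omitted.

```python
from typing import Any, Dict


def option_label(option: Dict[str, Any]) -> str:
    """Return the label shown for an option field.

    Uses ui.display_name when it is set, otherwise falls back to name.
    """
    ui = option.get("ui") or {}
    return ui.get("display_name") or option["name"]


# Hypothetical options, for illustration only.
with_display_name = {"name": "solve.duration", "ui": {"display_name": "Max solve time"}}
without_display_name = {"name": "solve.duration"}
```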

Delete shadow and switchback tests

November 10, 2025

You can now delete shadow and switchback tests directly from Console using the UI. On any experiment details view, click the Edit button in the header, then delete in the lower right, and then finally confirm the delete action.

When you delete a shadow test, all of the associated shadow runs are deleted as well. However, baseline runs are left in place as those runs are not tied to the experiment. Deleting a switchback test will not affect any of the runs associated with it; however, the switchback test information will be removed from those runs.

Note that once the experiment and its associated runs or run data have been deleted, they cannot be recovered.
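The difference between the two delete behaviors can be illustrated with a toy model. Everything in the sketch is hypothetical (the `Run` class, its `kind` labels, and both functions); it only mirrors the rules stated above and is not how Nextmv stores or deletes runs.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass(frozen=True)
class Run:
    """Hypothetical run record used only for this illustration."""

    id: str
    kind: str                      # "shadow", "baseline", or "switchback"
    test_id: Optional[str] = None  # experiment the run is tied to, if any


def delete_shadow_test(runs: List[Run], test_id: str) -> List[Run]:
    # Shadow runs tied to the test are deleted; baseline runs are kept
    # because they are not tied to the experiment.
    return [r for r in runs if not (r.kind == "shadow" and r.test_id == test_id)]


def delete_switchback_test(runs: List[Run], test_id: str) -> List[Run]:
    # Runs are kept, but the switchback test information is removed from them.
    return [
        replace(r, test_id=None) if r.kind == "switchback" and r.test_id == test_id else r
        for r in runs
    ]


runs = [
    Run("run-1", "baseline"),
    Run("run-2", "shadow", "shadow-a"),
    Run("run-3", "switchback", "switch-b"),
]
after_shadow_delete = delete_shadow_test(runs, "shadow-a")
after_switchback_delete = delete_switchback_test(runs, "switch-b")
```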